Technical Architecture

OSO's goal is to make it simple to contribute by providing an automatically deployed data pipeline so that the community can build this open data warehouse together. All of the code for this architecture is available to view/copy/redeploy from the OSO Monorepo.

Pipeline Overview

OSO maintains an ETL data pipeline that is continuously deployed from our monorepo and regularly indexes all available event data about projects in the oss-directory.

- Extract: raw event data from a variety of public data sources (e.g., GitHub, blockchains, npm, Open Collective)

- Transform: the raw data into impact metrics and impact vectors per project (e.g., # of active developers)

- Load: the results into various OSO data products (e.g., our API, website, widgets)
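
The three stages above can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions: the sample events, the field names (`project`, `actor`, `type`), and the "distinct actors per project" definition of active developers are all hypothetical and are not taken from the OSO codebase.

```python
from collections import defaultdict

# Hypothetical raw events standing in for data pulled from public sources.
RAW_EVENTS = [
    {"project": "project-a", "source": "github", "actor": "alice", "type": "commit"},
    {"project": "project-a", "source": "github", "actor": "bob", "type": "commit"},
    {"project": "project-a", "source": "github", "actor": "alice", "type": "issue_opened"},
    {"project": "project-b", "source": "npm", "actor": "carol", "type": "release"},
]


def extract():
    """Extract: return raw events (hard-coded here instead of calling real sources)."""
    return list(RAW_EVENTS)


def transform(events):
    """Transform: count distinct actors per project as a stand-in metric."""
    actors = defaultdict(set)
    for event in events:
        actors[event["project"]].add(event["actor"])
    return {project: len(names) for project, names in actors.items()}


def load(metrics):
    """Load: shape the metrics into rows a downstream data product could serve."""
    return [
        {"project": project, "active_developers": count}
        for project, count in sorted(metrics.items())
    ]


rows = load(transform(extract()))
```

Keeping each stage as a separate function mirrors the separation in the pipeline: a new data source only touches the extract step, while a new metric only touches the transform step.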

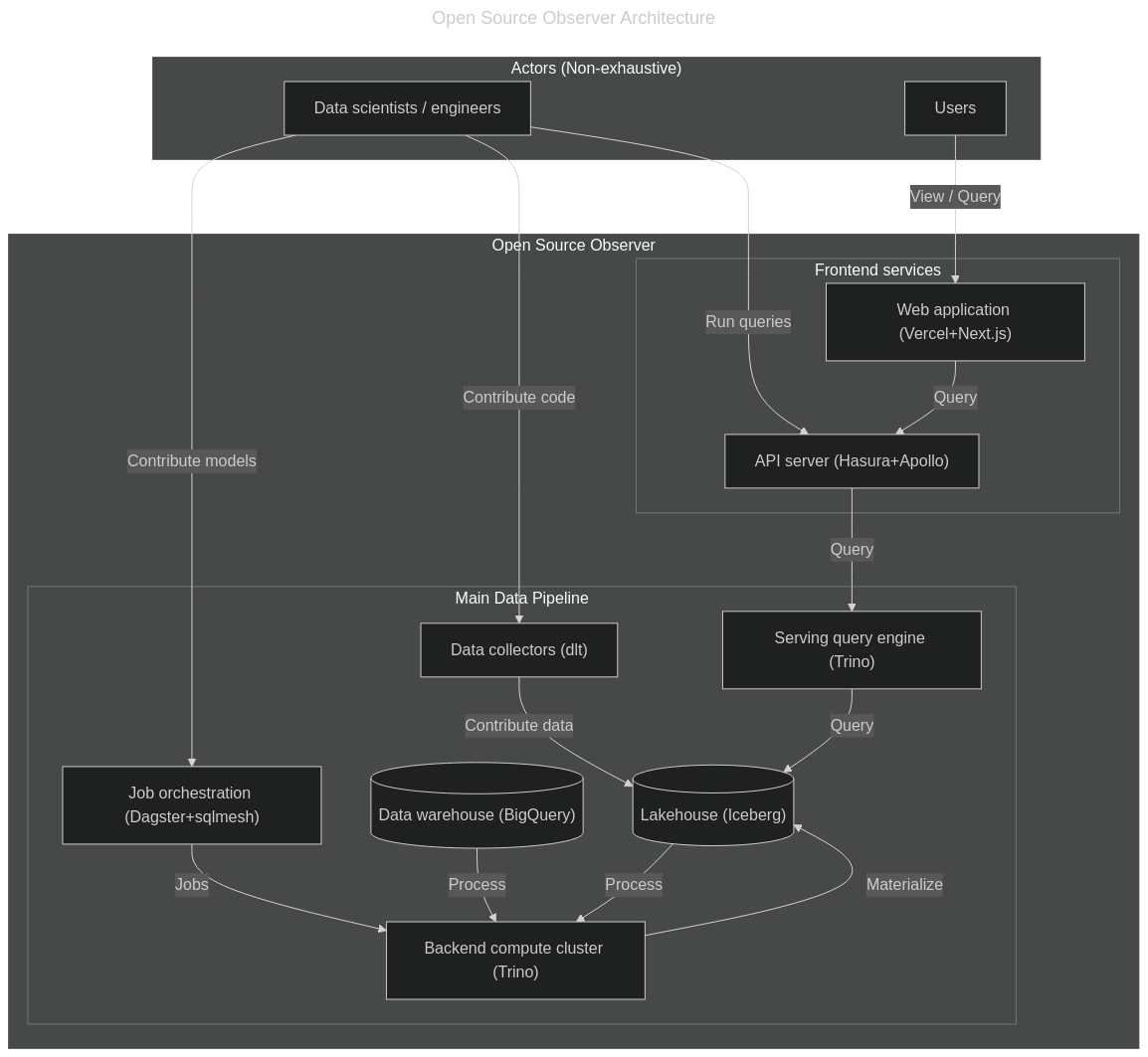

The following diagram illustrates Open Source Observer's technical architecture.

Major Components

The architecture has the following major components.

Data Orchestration

Dagster is the central data orchestration system. It manages the entire pipeline, from data ingestion (e.g., via dlt connectors) through to the sqlmesh pipeline.

You can see our public Dagster dashboard at https://dagster.opensource.observer/.
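
To make the orchestration idea concrete, the sketch below orders a few pipeline steps so that ingestion always runs before the transformation step. It deliberately uses only the Python standard library (`graphlib`) rather than the Dagster API, and the step names are hypothetical.

```python
from graphlib import TopologicalSorter

# Hypothetical step names; each step maps to the set of steps it depends on.
DEPENDENCIES = {
    "ingest_github": set(),
    "ingest_npm": set(),
    "sqlmesh_run": {"ingest_github", "ingest_npm"},
}


def run_pipeline(dependencies):
    """Visit every step after its dependencies and return the execution order."""
    executed = []
    for step in TopologicalSorter(dependencies).static_order():
        # A real orchestrator would launch and monitor the job here.
        executed.append(step)
    return executed


order = run_pipeline(DEPENDENCIES)
```

An orchestrator such as Dagster adds scheduling, retries, and observability on top of this basic dependency ordering.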

Data Lakehouse

Currently, all data is stored in managed Iceberg tables.

We also make heavy use of public datasets from Google BigQuery. To see all BigQuery datasets that you can subscribe to, check out our Data Overview.

sqlmesh pipeline

We use a sqlmesh pipeline to clean and normalize the data into a universal event table and metrics. You can read more about our event model here.
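
As a rough illustration of what normalizing into a universal event table means, the sketch below maps two differently shaped source records onto one common row type. It is a Python stand-in for logic that a sqlmesh pipeline would express as SQL models, and every column name, event type, and sample value here is an assumption rather than the actual OSO event model.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class Event:
    """One row of a unified event table (hypothetical columns)."""
    time: datetime
    event_type: str
    event_source: str
    from_id: str  # who or what performed the action
    to_id: str    # the artifact the action targeted
    amount: float


def from_github_commit(raw):
    """Normalize a GitHub-style commit record (ISO 8601 timestamp)."""
    return Event(
        time=datetime.fromisoformat(raw["committed_at"]),
        event_type="COMMIT_CODE",
        event_source="GITHUB",
        from_id=raw["author"].lower(),
        to_id=raw["repo"].lower(),
        amount=1.0,
    )


def from_chain_transaction(raw):
    """Normalize a blockchain-style transaction record (Unix timestamp)."""
    return Event(
        time=datetime.fromtimestamp(raw["block_timestamp"], tz=timezone.utc),
        event_type="CONTRACT_INVOCATION",
        event_source="BLOCKCHAIN",
        from_id=raw["from_address"].lower(),
        to_id=raw["to_address"].lower(),
        amount=1.0,
    )


commit = {
    "committed_at": "2024-01-02T00:00:00+00:00",
    "author": "Alice",
    "repo": "Example-Org/Example-Repo",
}
transaction = {
    "block_timestamp": 1704067200,  # 2024-01-01T00:00:00 UTC
    "from_address": "0xABC",
    "to_address": "0xDEF",
}

# Once normalized, events from any source can be sorted and aggregated together.
events = sorted(
    [from_github_commit(commit), from_chain_transaction(transaction)],
    key=lambda event: event.time,
)
```

Because every source ends up in the same shape, downstream metrics can be written once against the unified table instead of once per source.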

Trino clusters

We maintain separate Trino clusters that operate over the Iceberg tables:

- Production pipeline cluster - a read-write cluster to run the sqlmesh pipeline

- Consumer query cluster - a read-only cluster to serve the API and pyoso

API service

We use Hasura to automatically generate a GraphQL API from our consumer Trino cluster. We then use an Apollo Router to serve queries from the public. External developers can use the API to integrate data from OSO. Rate limits or subscription pricing may apply, depending on the systems used. The same API also powers the OSO website.
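
For readers unfamiliar with GraphQL over HTTP, the sketch below builds (but does not send) a typical POST request using only the Python standard library. The endpoint URL is a placeholder, the `projects` field and its `limit` argument are hypothetical, and the bearer-token header is an assumption about authentication, so check the API documentation for the real schema and credentials.

```python
import json
import urllib.request

# Placeholder endpoint, not the real OSO API URL.
API_URL = "https://example.invalid/graphql"

# Hypothetical query; the real schema may use different field names.
QUERY = """
query Projects($limit: Int!) {
  projects(limit: $limit) {
    name
  }
}
"""


def build_request(api_key, limit):
    """Build a GraphQL POST request without sending it."""
    body = json.dumps({"query": QUERY, "variables": {"limit": limit}}).encode("utf-8")
    return urllib.request.Request(
        API_URL,
        data=body,
        headers={
            "Content-Type": "application/json",
            # Assumed auth scheme; replace with whatever the API requires.
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


request = build_request("YOUR_API_KEY", limit=5)
payload = json.loads(request.data)
```

Passing `request` to `urllib.request.urlopen` would send it; the response body is JSON with a top-level `data` key on success and an `errors` key on failure, as is conventional for GraphQL servers.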

OSO Website

The OSO website is served at https://www.opensource.observer. It provides an easy-to-use public view into the data. We currently build it with Next.js and host it on Vercel.

Open Architecture for Open Source Data

The architecture is designed to be fully open to maximize open source collaboration. With contributions and guidance from the community, we want Open Source Observer to evolve as we better understand what impact looks like in different domains.

All code is open source in our monorepo. All data, including every stage in our pipeline, is publicly available via pyoso. All data orchestration is visible in our public Dagster dashboard.

You can read more about our open philosophy on our blog.